Deployment and executing migrations

At this point our droplet is set up with all the software and user accounts that we need, so let’s move on to deploying and running our API application.

At a very high level, our deployment process will consist of three actions:

1. Copying the application binary and SQL migration files to the droplet.

2. Executing the migrations against the PostgreSQL database on the droplet.

3. Starting the application binary as a background service.

For now, we’ll just focus on steps 1 and 2, and tackle running our application as a background service in the next chapter.

Let’s begin by creating a new make production/deploy/api rule in our makefile, which we will use to execute these first two steps automatically. Like so:

```makefile
...

# ==================================================================================== #
# PRODUCTION
# ==================================================================================== #

production_host_ip = "161.35.71.158"

## production/connect: connect to the production server
.PHONY: production/connect
production/connect:
	ssh greenlight@${production_host_ip}

## production/deploy/api: deploy the api to production
.PHONY: production/deploy/api
production/deploy/api:
	rsync -P ./bin/linux_amd64/api greenlight@${production_host_ip}:~
	rsync -rP --delete ./migrations greenlight@${production_host_ip}:~
	ssh -t greenlight@${production_host_ip} 'migrate -path ~/migrations -database $$GREENLIGHT_DB_DSN up'
```

Let’s quickly break down what this new rule is doing.

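First, it uses rsync to copy the ./bin/linux_amd64/api executable binary (the one built specifically for Linux) and the ./migrations folder into the greenlight user's home directory on the droplet. The -P flag shows progress and keeps partially transferred files, -r copies the migrations folder recursively, and --delete removes any files from the droplet's migrations folder that no longer exist locally.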

Then it uses ssh with the -t flag (which forces a pseudo-terminal to be allocated) to run the database migrations on our droplet as the greenlight user, with the following command:

'migrate -path ~/migrations -database $$GREENLIGHT_DB_DSN up'

Because the $ character has a special meaning in makefiles, we escape it in the command above by doubling it to $$. Make turns $$ back into a single $, so the command that actually runs on our droplet is 'migrate -path ~/migrations -database $GREENLIGHT_DB_DSN up', and the migration uses the value of the GREENLIGHT_DB_DSN environment variable on the droplet.

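It's also important that we wrap this command in single quotes. Inside single quotes the local shell treats $GREENLIGHT_DB_DSN as literal text, so it is passed through ssh unchanged and only expanded on the droplet. If we used double quotes instead, the string would be interpreted by the local shell, which would substitute its own value for the variable, so we would need an additional \ to escape the $, like so: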

"migrate -path ~/migrations -database \$$GREENLIGHT_DB_DSN up"
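To see these quoting rules in action, here is a small Go sketch (not part of the Greenlight codebase) that runs each variant of the remote command through /bin/sh, the shell make uses by default for recipe lines, with a made-up local value for GREENLIGHT_DB_DSN. It uses printf in place of ssh, so it only shows which string ssh would receive and never touches the droplet. It assumes a Unix-like machine with /bin/sh available.

```go
package main

import (
	"fmt"
	"os"
	"os/exec"
	"strings"
)

// localDSN is a made-up value standing in for whatever GREENLIGHT_DB_DSN
// happens to hold on your *local* machine.
const localDSN = "postgres://local_user@localhost/greenlight_dev"

// expandLocally passes arg to /bin/sh -c the same way make passes a recipe
// line, and returns the single argument that ssh would then receive.
// printf stands in for ssh, so nothing connects to the droplet.
func expandLocally(arg string) (string, error) {
	cmd := exec.Command("/bin/sh", "-c", `printf '%s\n' `+arg)
	// A later duplicate key wins, so this overrides any real local value.
	cmd.Env = append(os.Environ(), "GREENLIGHT_DB_DSN="+localDSN)
	out, err := cmd.Output()
	return strings.TrimSuffix(string(out), "\n"), err
}

func main() {
	// Each variant is written as the shell sees it, i.e. after make has
	// already turned $$ into $ (and \$$ into \$).
	variants := []struct{ name, arg string }{
		{"single quotes", `'migrate -path ~/migrations -database $GREENLIGHT_DB_DSN up'`},
		{"double quotes", `"migrate -path ~/migrations -database $GREENLIGHT_DB_DSN up"`},
		{"double quotes + \\$", `"migrate -path ~/migrations -database \$GREENLIGHT_DB_DSN up"`},
	}

	for _, v := range variants {
		got, err := expandLocally(v.arg)
		if err != nil {
			fmt.Fprintln(os.Stderr, err)
			os.Exit(1)
		}
		fmt.Printf("%-20s => %s\n", v.name, got)
	}
}
```

The single-quoted and the \$-escaped variants both hand ssh the literal text $GREENLIGHT_DB_DSN, to be expanded on the droplet, while the plain double-quoted variant has already substituted the local DSN before ssh ever runs.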

Alright, let’s try this out!

Go ahead and execute the make production/deploy/api rule that we just made. You should see that all the files copy across successfully (this may take a minute or two to complete depending on your connection speed), and the migrations are then applied to your database. Like so:

```
$ make production/deploy/api
rsync -P ./bin/linux_amd64/api greenlight@"161.35.71.158":~
api
7,618,560 100% 119.34kB/s 0:01:02 (xfr#1, to-chk=13/14)
rsync -rP --delete ./migrations greenlight@"161.35.71.158":~
sending incremental file list
migrations/
migrations/000001_create_movies_table.down.sql
28 100% 27.34kB/s 0:00:00 (xfr#2, to-chk=11/14)
migrations/000001_create_movies_table.up.sql
286 100% 279.30kB/s 0:00:00 (xfr#3, to-chk=10/14)
migrations/000002_add_movies_check_constraints.down.sql
198 100% 193.36kB/s 0:00:00 (xfr#4, to-chk=9/14)
migrations/000002_add_movies_check_constraints.up.sql
289 100% 282.23kB/s 0:00:00 (xfr#5, to-chk=8/14)
migrations/000003_add_movies_indexes.down.sql
78 100% 76.17kB/s 0:00:00 (xfr#6, to-chk=7/14)
migrations/000003_add_movies_indexes.up.sql
170 100% 166.02kB/s 0:00:00 (xfr#7, to-chk=6/14)
migrations/000004_create_users_table.down.sql
27 100% 26.37kB/s 0:00:00 (xfr#8, to-chk=5/14)
migrations/000004_create_users_table.up.sql
294 100% 287.11kB/s 0:00:00 (xfr#9, to-chk=4/14)
migrations/000005_create_tokens_table.down.sql
28 100% 27.34kB/s 0:00:00 (xfr#10, to-chk=3/14)
migrations/000005_create_tokens_table.up.sql
203 100% 99.12kB/s 0:00:00 (xfr#11, to-chk=2/14)
migrations/000006_add_permissions.down.sql
73 100% 35.64kB/s 0:00:00 (xfr#12, to-chk=1/14)
migrations/000006_add_permissions.up.sql
452 100% 220.70kB/s 0:00:00 (xfr#13, to-chk=0/14)
ssh -t greenlight@"161.35.71.158" 'migrate -path ~/migrations -database $GREENLIGHT_DB_DSN up'
1/u create_movies_table (11.782733ms)
2/u add_movies_check_constraints (23.109006ms)
3/u add_movies_indexes (30.61223ms)
4/u create_users_table (39.890662ms)
5/u create_tokens_table (48.659641ms)
6/u add_permissions (58.23243ms)
Connection to 161.35.71.158 closed.
```

Let’s quickly connect to our droplet and verify that everything has worked.

```
$ make production/connect
ssh greenlight@"161.35.71.158"
Welcome to Ubuntu 20.04.2 LTS (GNU/Linux 5.4.0-65-generic x86_64)
...
greenlight@greenlight-production:~$ ls -R
.:
api  migrations

./migrations:
000001_create_movies_table.down.sql
000001_create_movies_table.up.sql
000002_add_movies_check_constraints.down.sql
000002_add_movies_check_constraints.up.sql
000003_add_movies_indexes.down.sql
000003_add_movies_indexes.up.sql
000004_create_users_table.down.sql
000004_create_users_table.up.sql
000005_create_tokens_table.down.sql
000005_create_tokens_table.up.sql
000006_add_permissions.down.sql
000006_add_permissions.up.sql
```

So far so good. We can see that the home directory of our greenlight user contains the api executable binary and a folder containing the migration files.

Let’s also connect to the database using psql and verify that the tables have been created by using the \dt meta command. Your output should look similar to this:

```
greenlight@greenlight-production:~$ psql $GREENLIGHT_DB_DSN
psql (12.6 (Ubuntu 12.6-0ubuntu0.20.04.1))
SSL connection (protocol: TLSv1.3, cipher: TLS_AES_256_GCM_SHA384, bits: 256, compression: off)
Type "help" for help.

greenlight=> \dt
                List of relations
 Schema |       Name        | Type  |   Owner
--------+-------------------+-------+------------
 public | movies            | table | greenlight
 public | permissions       | table | greenlight
 public | schema_migrations | table | greenlight
 public | tokens            | table | greenlight
 public | users             | table | greenlight
 public | users_permissions | table | greenlight
(6 rows)
```

Running the API

While we're connected to the droplet, let's try running the api executable binary. We can't listen for incoming connections on port 80 because Caddy is already using it, so we'll listen on the unprivileged port 4000 instead (on Linux, binding to ports below 1024 requires root privileges by default).

If you’ve been following along, port 4000 on your droplet should currently be blocked by the firewall rules, so we’ll need to relax this temporarily to allow incoming requests. Go ahead and do that like so:

```
greenlight@greenlight-production:~$ sudo ufw allow 4000/tcp
Rule added
Rule added (v6)
```

And then start the API with the following command:

```
greenlight@greenlight-production:~$ ./api -port=4000 -db-dsn=$GREENLIGHT_DB_DSN -env=production
time=2023-09-10T10:59:13.722+02:00 level=INFO msg="database connection pool established"
time=2023-09-10T10:59:13.722+02:00 level=INFO msg="starting server" addr=:4000 env=production
```
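The command above passes three flags to the binary. As an illustration only, here is a minimal sketch of how a Go program could declare them with the standard library's flag package. The flag names come from the command; the config struct, the defaults and the help text are assumptions, since the application's own code isn't shown in this chapter.

```go
package main

import (
	"flag"
	"fmt"
	"os"
)

// config holds the command-line settings. The field names and the
// defaults below are illustrative assumptions.
type config struct {
	port  int
	env   string
	dbDSN string
}

// parseFlags parses args (normally os.Args[1:]) into a config. Using a
// dedicated FlagSet instead of the global one keeps it easy to test.
func parseFlags(args []string) (config, error) {
	var cfg config
	fs := flag.NewFlagSet("api", flag.ContinueOnError)
	fs.IntVar(&cfg.port, "port", 4000, "API server port")
	fs.StringVar(&cfg.env, "env", "development", "Environment (development|staging|production)")
	fs.StringVar(&cfg.dbDSN, "db-dsn", "", "PostgreSQL DSN")
	err := fs.Parse(args)
	return cfg, err
}

func main() {
	cfg, err := parseFlags(os.Args[1:])
	if err != nil {
		// The FlagSet has already printed the error and usage to stderr.
		os.Exit(2)
	}
	fmt.Printf("addr=:%d env=%s\n", cfg.port, cfg.env)
}
```

Saved as main.go and run with go run main.go -port=4000 -env=production, this prints addr=:4000 env=production; leaving out -env falls back to the assumed default of development.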

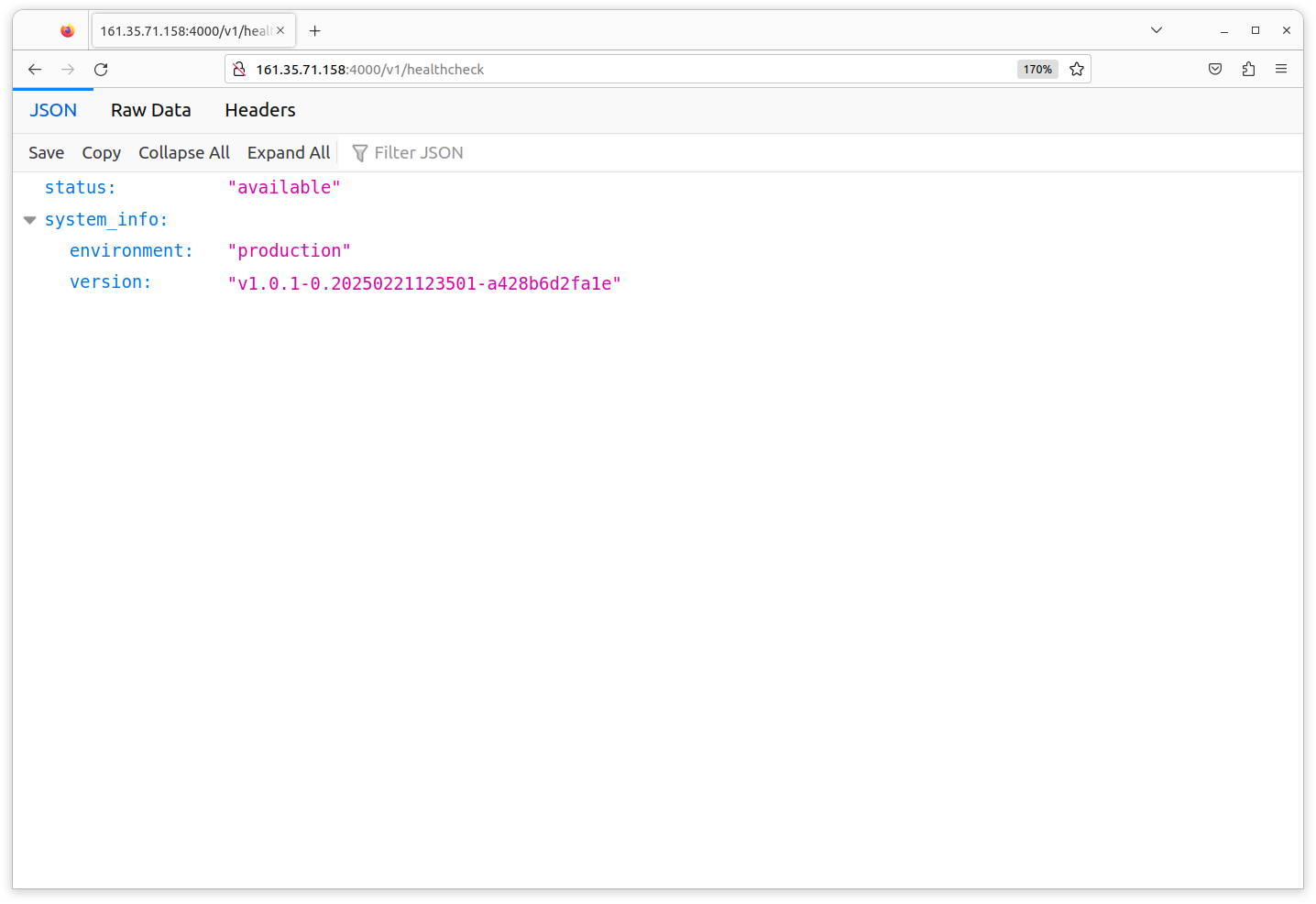

At this point you should be able to visit http://<your_droplet_ip>:4000/v1/healthcheck in your web browser and get a successful response from the healthcheck endpoint, similar to this:

Lastly, head back to your SSH terminal window and press Ctrl+C to stop the API from running on your droplet. You should see it gracefully shut down like so:

```
greenlight@greenlight-production:~$ ./api -port=4000 -db-dsn=$GREENLIGHT_DB_DSN -env=production
time=2023-09-10T10:59:13.722+02:00 level=INFO msg="database connection pool established"
time=2023-09-10T10:59:13.722+02:00 level=INFO msg="starting server" addr=:4000 env=production
^Ctime=2023-09-10T10:59:14.722+02:00 level=INFO msg="shutting down server" signal=interrupt
time=2023-09-10T10:59:14.722+02:00 level=INFO msg="completing background tasks" addr=:4000
time=2023-09-10T10:59:18.722+02:00 level=INFO msg="stopped server" addr=:4000
```